This blog post is a text version of a talk I gave at Lambda World Cádiz on October 25, 2018, for those who couldn't attend and don't like watching video recordings. Thanks a lot to Jan Stępień for encouraging me to publish a text version of my talk.

In this article, I want to share my thoughts on a trend I have been observing for a few years. It's a trend that probably exists in the strongly typed functional programming community at large, and to some extent, it's possibly just a specific shape of a general trend in the realm of software development. But I have mainly observed it in the Scala community, because that's where I spend most of my time. I want to talk about how we often cause more complexity than necessary with the methods we choose. In fact, it seems to me as if we sometimes cherish complexity instead of fighting it. So let's see why that's the case and what we could do about it.

While this blog post is based on my experience in the Scala community, you don't have to be fluent in Scala in order to take something away from it. Before we can have a conversation about what's wrong with our relationship to complexity, though, it's necessary to talk about what complexity actually is. After that, I will go into more detail about the phenomenon I call the complexity trap.

What is complexity?

When we say that something is "complex", what it means, according to the dictionary, is that it's "hard to separate, analyze, or solve". The word originates from the Latin verb "complectere", which means "to braid". Consider a bunch of modules that are completely entangled, so much so that you can neither reason about them individually any more nor change one of them without touching the others as well. That's a pretty good example of complexity in software development.

But it also helps to look at the origin of the word "simple" — the opposite of "complex". "Simple" has its roots in the Latin adjective "simplex", which means "single" or "having one ingredient". In software development, we have a few principles that aim to achieve simplicity in this original sense. Two prominent examples of that are The Single Responsibility Principle and Separation of Concerns.

One such separation of concerns that we really love to enforce as functional programmers is the separation between effects and business logic. If these two concerns are not separated, our code becomes harder to reason about and more difficult to test. Hence, we try to isolate business logic from effects and implement the former as pure functions.

In his classic paper "No Silver Bullet", Fred Brooks identified two different types of complexity. Essential complexity is inherent in the problem domain. We cannot remove it because it stems from the very problem that we are supposed to solve with our software. Accidental complexity, on the other hand, is of our own making. This is complexity that is created by software developers, and in principle, it's possible to remove complexity of this type.

According to Brooks, we spend most of our time tackling essential complexity these days, and there is not much accidental complexity left. This means that reducing accidental complexity further will not yield a lot of benefit. But that statement is from 1986. Today, it's 2018, and I beg to disagree. I think we are spending a crazy amount of time managing accidental complexity, and we should put our focus much more on minimizing it.

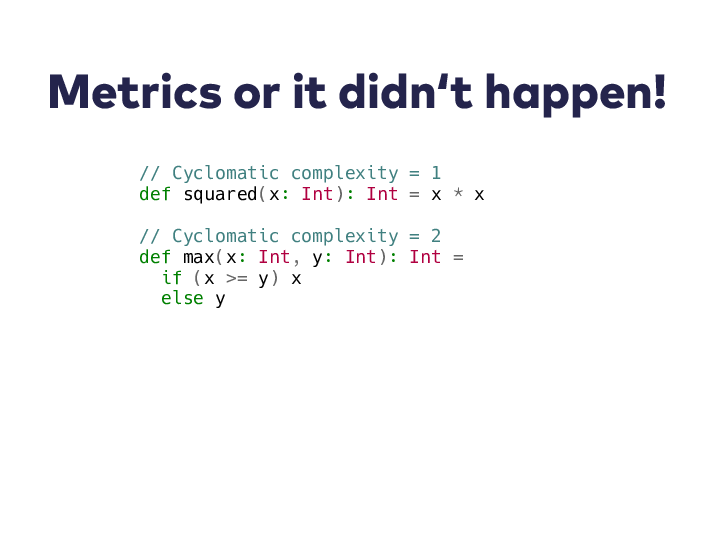

Over time, various metrics for complexity have been created, especially, but not exclusively, for code complexity. The most well-known of those metrics is probably McCabe's cyclomatic complexity: The more different code paths there are in a function, the higher its complexity. So one of the things that increases a function's complexity is branching, for example by means of if expressions or pattern matching expressions. The higher the cyclomatic complexity of a function, the more test cases are necessary to achieve full path coverage.
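To make the counting concrete, here is a minimal sketch. The `Shipping` object, the `costCents` function, and its pricing rules are made up for illustration. Each `if` is one decision point, and the metric is the number of decision points plus one.

```scala
object Shipping {

  // Three decision points (the three `if`s), so McCabe's metric is 3 + 1 = 4.
  // Covering every path therefore takes four test cases.
  def costCents(weightKg: Int, express: Boolean): Int =
    if (weightKg <= 0) 0           // path 1: nothing to ship
    else if (express) 1500         // path 2: express delivery
    else if (weightKg > 20) 900    // path 3: heavy parcel
    else 500                       // path 4: standard parcel
}
```

Adding one more `else if` branch would add one more path and one more test case.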

Of course, there are other relevant metrics related to complexity in software development. Complexity can be measured at different levels, not only at the code level. For example, we can measure the extent of coupling between modules. The more dependencies there are between the modules of a system, the higher its overall complexity.

But let's come back to cyclomatic complexity. It's certainly okay as a metric of how hard it is to test a function. However, I am actually more interested in how difficult it is to reason about what a function or a program is doing — or to reason about a system or an entire system of systems. In other words, I am interested in cognitive complexity.

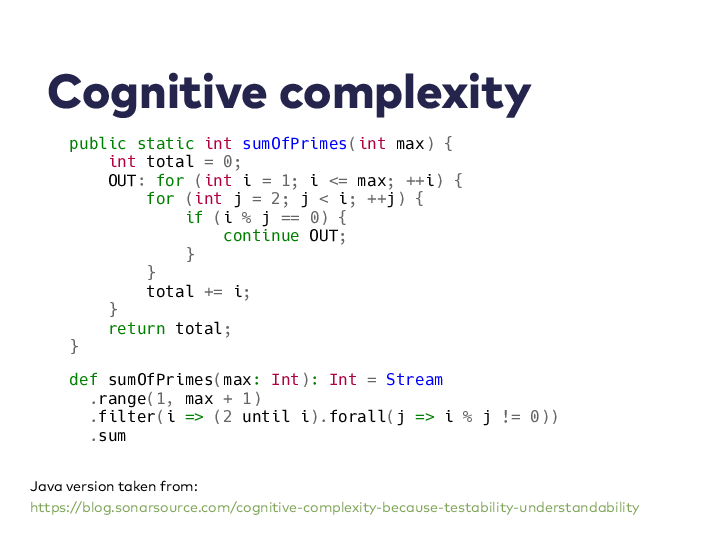

In the imperative version of the sumOfPrimes function, a lot of complexity stems from mutable state and flow control statements. The functional solution doesn't have any of that. So you could say that we are a bit at an advantage as functional programmers. However, we shouldn't be too smug about it either.
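For readers of this text version, here is a rough sketch of what the two variants could look like. It is not the code from the slide: the object name, the `isPrime` helper, and the decision to sum all primes up to and including a limit are assumptions made for illustration.

```scala
object SumOfPrimes {

  // Trial division up to the square root; good enough for an illustration.
  def isPrime(n: Int): Boolean =
    n > 1 && (2 to math.sqrt(n.toDouble).toInt).forall(n % _ != 0)

  // Imperative version: mutable variables and nested loops with flags.
  def sumOfPrimesImperative(limit: Int): Int = {
    var sum = 0
    var candidate = 2
    while (candidate <= limit) {
      var divisor = 2
      var prime = true
      while (prime && divisor * divisor <= candidate) {
        if (candidate % divisor == 0) prime = false
        divisor += 1
      }
      if (prime) sum += candidate
      candidate += 1
    }
    sum
  }

  // Functional version: no mutable state, no explicit flow control.
  def sumOfPrimes(limit: Int): Int =
    (2 to limit).filter(isPrime).sum
}
```

Both functions compute the same result, but the reader of the imperative one has to track four mutable variables and two loop conditions at once.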

What I find interesting is that SonarSource actually created a metric for the cognitive complexity of code. However, I think that not every aspect of what constitutes cognitive complexity can easily be captured in metrics. For example, syntax, boilerplate, and indirections can all add to a program's cognitive complexity as well.

But why is it so important to keep complexity in check anyway? Sadly, we human beings are incredibly bad at juggling many things at once. According to a classic study by George Miller, a cognitive psychologist, human beings can only hold seven items in working memory at the same time, plus or minus two, on average. So the more concerns are entangled, the more code paths there are, and the more syntax and boilerplate there is, the more difficult it will be for us to reason about a program.

In order to mitigate this, we use abstractions. Ideally, our programs are composed of many small functions, and we put related functions into the same module. In order to reduce coupling and the complexity of the whole system, we hide implementation details of our modules from others that depend on them.

In functional programming, we take abstraction much further than in other paradigms. We often abstract over things like iteration or data types in a way that is rarely seen outside of functional programming. This facilitates the definition of very simple functions that can be composed in many different ways, which is exactly what allowed us to write our sumOfPrimes function in a way that is much easier to reason about than the imperative version.

The complexity trap and how to escape it

Now that we have achieved a common understanding of what I mean when I talk about complexity, let me introduce you to my notion of the complexity trap. It might be fair to say that there is not a single complexity trap but rather multiple traps, even though they are all related to each other in one way or another.

The first trap that I want to talk about is neglecting the costs. We often seem to ignore the costs of the approaches and techniques we choose to implement our programs. Usually, the intentions are good: Often, we choose a technique because we explicitly want to reduce the complexity in our code base. But sometimes, doing so comes with a cost, and that cost can even be new complexity at a different level, which might be worse than what we had before.

Let me illustrate this with an example. In general, scrap your boilerplate is a very desirable principle, because the more boilerplate code we have to write, the higher the complexity of our program and the higher the chance that we introduce bugs that could easily be avoided.

There is one specific way of scrapping boilerplate that is as popular in the Scala community as it is problematic. In Scala, the way that you encode values of a certain type to JSON and back is often defined via typeclasses. Instances of these typeclasses can often be derived automatically for arbitrary data and record types with a single line of code. This feature is heavily used in many Scala projects.

However, people rarely seem to consider the cost-value ratio of the way they use these automatically derived JSON codecs: In almost all projects I have seen, people use automatically derived JSON codecs to expose their internal domain model to the outside world.

Doing that certainly means that you scrap quite a bit of boilerplate and reduce the complexity in your code. However, if you look at the bigger picture, this is the opposite of removing complexity. Exposing your domain model like this almost always leads to strongly coupled systems that are very difficult to evolve. If you ask me, this is one of the most promising ways to create a distributed monolith. It really amazes me how many people are totally okay with simply making their internal model their public API, especially since functional programmers are usually crazy about abstractions.

Apparently, we are even crazier about removing boilerplate. However, there are alternatives. If you don't want to expose your domain model, but you want to avoid having to define your JSON codecs manually, another way to decouple your model and your API is to introduce a DTO layer. This is an abstraction layer that is often ridiculed these days. While it would allow you to use automatically derived JSON codecs for your separate DTO type hierarchy, it would still be necessary to implement a mapping between your domain model and your DTOs. You will probably end up with as much boilerplate code as if you had manually defined your JSON codecs for your domain model types.
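A minimal sketch of such a DTO layer could look like the following. All type and field names are hypothetical, and the JSON library is left out on purpose: the point is only that the derived codec would be attached to `CustomerDto`, never to `Customer`.

```scala
object DtoLayer {

  // Internal domain model: free to evolve together with the business logic.
  final case class CustomerId(value: Long)
  final case class Customer(
      id: CustomerId,
      firstName: String,
      lastName: String,
      creditLimitCents: Long // internal detail that should not leak into the API
  )

  // Public representation: the only type a JSON codec would be derived for.
  final case class CustomerDto(id: Long, displayName: String)

  // The hand-written mapping is the boilerplate you pay for the decoupling.
  def toDto(customer: Customer): CustomerDto =
    CustomerDto(
      id = customer.id.value,
      displayName = s"${customer.firstName} ${customer.lastName}"
    )
}
```

With this split, renaming `creditLimitCents` or restructuring `Customer` changes `toDto` at most, while the shape of the public JSON stays stable.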

In the end, you have to choose your poison. Simply exposing your domain model with automatically derived JSON codecs is probably the most addictive poison, because it gives you instant gratification. In the long run, however, you will almost certainly have to pay the price.

There is a variant of neglecting the costs that deserves to be discussed on its own. In many projects I have seen, an approach or technology is picked because it is being perceived as common courtesy. The reasoning is that "this is what you do in 2018". If you ask people why they use approach or technology X, one of the most common reactions is that they are completely baffled to even hear such a question. So, in a slightly anxious and reproachful tone, they answer with a counter question: "Well, isn't that industry standard?"

You know what's industry standard? Shipping containers! However, when people say "industry standard", what they actually refer to is often just the latest fad — something that will definitely not be industry standard any more in a couple of years. If we follow this approach, it means that we adopt techniques without thinking. We don't think about their costs, and we certainly don't think about whether the benefits of that technique can even be realized in our specific situation.

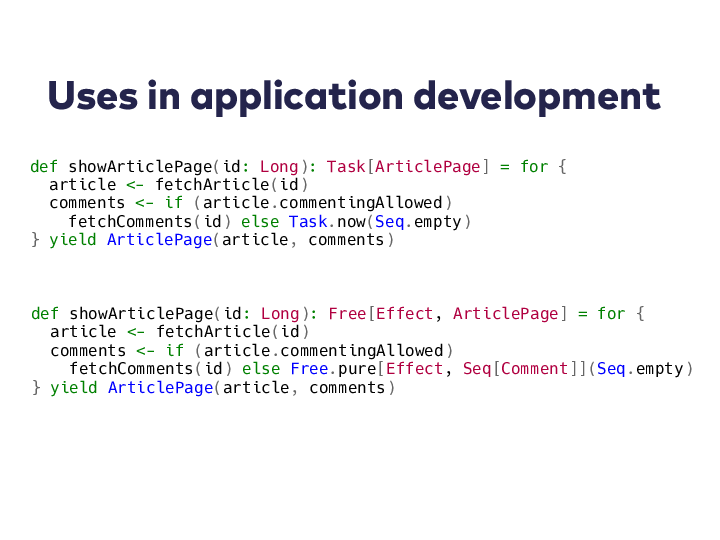

Here is a prime example that pops up in many Scala projects: Many people seem to think that they will be excommunicated from the Church of Functional Programming if they don't use free monads or tagless final. There is this mantra that you must not commit to a specific effect monad too early. So what is a Scala developer going to do? Well, one thing is for sure: They are not going to commit to anything. Instead, they are going to abstract over monads and over many other things — everywhere and all the time.

There are good reasons why you want to abstract over the effect monad when you develop a library. For example, when you provide an HTTP client library, you want to enable people to use it regardless of which effect monad they prefer. Also, if you really have the need to interpret some instructions in different ways in your application, free monads or tagless final are a perfectly reasonable choice. For example, you may want to define a data processing pipeline and then be able to execute it either in a Hadoop cluster or on a single machine.

However, most of the time when we write applications, such a need doesn't exist. So what other reasons to abstract over the effect monad are there? Well, preparing for the ability to switch from Monix Task to ZIO is a bit like staying away from Postgres-specific SQL because you might switch to MySQL one day. So the main reason that remains for people to abstract over the effect type in application development is the desire to make their effectful code unit-testable.

How does this work? You have a function that mixes logic as well as effects, and in your production code, you execute it in your favourite effect monad, talking to a real external system. In unit tests, on the other hand, you execute it in the Id monad, using hard-coded test data. Object-oriented programmers call this technique stubbing, and they get mocked for doing it, mostly by people like us.
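The mechanics can be sketched in a few lines. This is a deliberately stripped-down illustration in tagless final style, not code from any real project: the `Monad` trait stands in for the one a library would provide, and `UserRepo`, `greeting`, and `stubRepo` are hypothetical names.

```scala
object TaglessSketch {

  // A minimal Monad typeclass, standing in for a library-provided one.
  trait Monad[F[_]] {
    def pure[A](a: A): F[A]
    def flatMap[A, B](fa: F[A])(f: A => F[B]): F[B]
  }

  // The Id monad: a value "wrapped" in nothing at all.
  type Id[A] = A

  implicit val idMonad: Monad[Id] = new Monad[Id] {
    def pure[A](a: A): Id[A] = a
    def flatMap[A, B](fa: Id[A])(f: A => Id[B]): Id[B] = f(fa)
  }

  // The algebra: effects described abstractly in terms of some F.
  trait UserRepo[F[_]] {
    def findName(id: Long): F[Option[String]]
  }

  // Logic and effects mixed in one function, abstracted over F.
  def greeting[F[_]](id: Long)(implicit M: Monad[F], repo: UserRepo[F]): F[String] =
    M.flatMap(repo.findName(id)) {
      case Some(name) => M.pure(s"Hello, $name!")
      case None       => M.pure("Hello, stranger!")
    }

  // Test interpreter: hard-coded data, executed in Id.
  val stubRepo: UserRepo[Id] = new UserRepo[Id] {
    def findName(id: Long): Id[Option[String]] =
      if (id == 1L) Some("Ada") else None
  }
}
```

A unit test would then call `TaglessSketch.greeting[TaglessSketch.Id](1L)(TaglessSketch.idMonad, TaglessSketch.stubRepo)` and compare the plain `String` it gets back. The production interpreter, which would implement `UserRepo` for a real effect type and talk to a real system, is not exercised by such a test at all.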

It's important to understand and keep in mind that abstracting over effect monads comes with a cost. For one, there is quite a bit of boilerplate code involved. Some of that is just syntactic noise that is due to the fact that Scala's type inference is quite limited. But there is also the whole interpreter for your algebra, which I'm not even showing. And if your program has some conditional logic, things can get rather messy pretty quickly. Also, by their very definition, monads are strictly sequential. If you want to execute some effects in parallel, because they are actually independent from one another, you will have to abstract over applicatives as well. Getting those to actually execute in parallel in your target effect type, however, is surprisingly easy to get wrong in my experience. Finally, abstracting over effect monads seems to lead people to writing big functions in which logic and effects are completely entangled, because they are still able to unit test the whole big function using the Id monad.

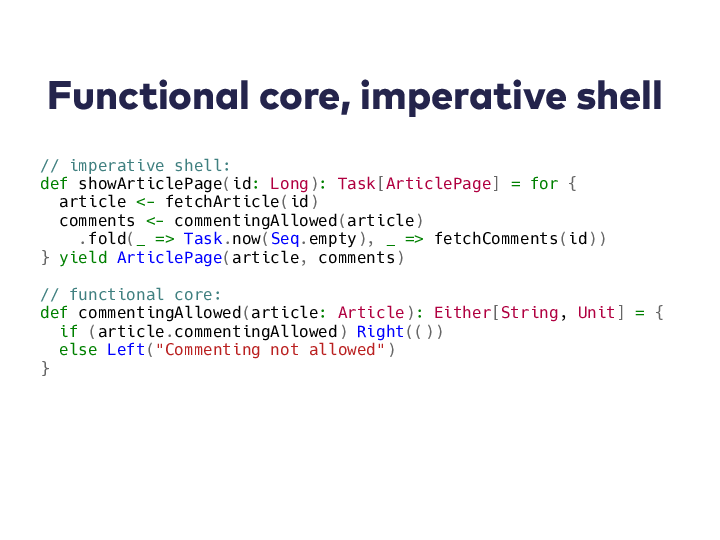

There is an alternative that seems to be almost taboo, which is to not abstract over your effect monad at all in your application code. If you're honest, when testing with the Id monad, you're only testing the few pieces of application logic within your big effectful function and you're verifying that your test interpreter is working as expected. But you have no clue if your production interpreter, which is talking to an external system, is working correctly at all. So abstracting over the effect monad and using the Id monad in tests doesn't even help you to write meaningful tests. If you forego this abstraction, you will be able to use your effect type in all its glory. Among other things, this makes stuff like parallel execution very straightforward.

If you follow this approach, you have to be very strict about separating the logic of your program from the parts involving IO or other effects. One way of doing this is to employ a Functional Core, Imperative Shell architecture. What this means is that you'll move all the logic into the purely functional core. If necessary, you will have to extract it from your big effectful functions. This way, you can minimize the branching happening in the imperative shell, and you can use the whole functionality of your favourite effect type there.

With all the logic being implemented in rather small, pure, and effect-free functions, it becomes a lot easier to write unit tests for your application's logic. The imperative shell will be almost free of logic, so that it can be covered with only a small number of integration tests. This does a better job at testing what is happening in a production-like environment. Free monads or tagless final are not a great choice if you only care about testability. There are alternatives that are less complex and at least as adequate for achieving this goal.
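As a rough sketch of this split, consider the following. The standard library's `Future` is used here only to keep the example free of dependencies; in a real application it would be whichever effect type you have committed to. The domain (`Order`, `discountPercent`) and the two lookup functions passed to `Shell` are invented for illustration.

```scala
import scala.concurrent.{ExecutionContext, Future}

object CoreAndShell {

  final case class Order(id: Long, totalCents: Long)

  // Functional core: pure and effect-free, so plain unit tests suffice.
  object Core {
    def discountPercent(isPremium: Boolean, orders: List[Order]): Int = {
      val spentCents = orders.map(_.totalCents).sum
      if (isPremium && spentCents >= 100000L) 15
      else if (isPremium) 10
      else if (spentCents >= 100000L) 5
      else 0
    }
  }

  // Imperative shell: effects and wiring only, tied to one concrete effect type.
  final class Shell(
      isPremium: Long => Future[Boolean],
      loadOrders: Long => Future[List[Order]]
  )(implicit ec: ExecutionContext) {

    def discountFor(customerId: Long): Future[Int] = {
      // Both lookups are started before they are combined,
      // so two independent requests can run in parallel.
      val premiumF = isPremium(customerId)
      val ordersF = loadOrders(customerId)
      premiumF.zip(ordersF).map { case (premium, orders) =>
        Core.discountPercent(premium, orders)
      }
    }
  }
}
```

All branching lives in `Core.discountPercent`, which can be tested with plain values. `Shell.discountFor` contains no decisions of its own, so a handful of integration tests against real collaborators is enough to cover it.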

So why is it that people jump to certain solutions so quickly? I have the impression that the discourse in our community is characterized by a focus on how to use certain abstractions. At best, we find discussions of when to use one abstraction over another. But we rarely see discussions about when not using any of those abstractions might be a viable solution.

I think we should all talk and write more about the costs of certain abstractions and have critical discussions of the trade-offs we need to make. Abstractions are supposed to help us keep complexity in check, but using them can come with its own complexity as well. Usually, a given abstraction is a very good fit for a few particular use cases. However, there are often alternative solutions that are more adequate for other use cases. These solutions are often both less complex and more boring.

Unfortunately, we don't like boring. We like to take the language and the compiler to their limits. We also like to adopt the latest fad. But the software systems we work on in our jobs should not be a playground or a test bed for the latest fad. So let's talk and write more about when certain abstractions are a good fit and when they are not, and about when a more boring solution might be a better fit.

And when someone in your team tells you, "This problem must be solved with free monads", don't be afraid to ask why. In general, make a habit of demanding to hear why an approach was chosen, which alternatives have been considered, and why they've been discarded. A great technique for documenting these decisions for future reference is to write Architecture Decision Records (ADRs).

The last aspect of the complexity trap that I want to discuss is what I call maximum zoom. Have you ever used one of those coin-operated telescopes, or binoculars, in order to zoom in to some detail of a scenic view? Maybe you have also experienced how easy it is to lose your bearings and how difficult it can be to keep track of the broader context. I think the same thing happens a lot in software engineering.

Let me explain this with an example. These days, many teams face the challenge that the system they are working on needs to make a lot of requests to various other systems. Very often, this happens in a Netflix-style microservice architecture, where you have tons of tiny microservices and another service in front of those, orchestrating the others, aggregating their response data and doing some additional processing, for example filtering. One particular pattern in which such an orchestration service occurs is BFF (backend for frontend).

Very often, having to make all these requests to multiple backend microservices leads to high response times for the end user, which is then mitigated by introducing batching and caching of previous results, and also by making requests that are independent from one another in parallel. Imagine we'd be taking care of all these different concerns in the same place. That would certainly not be simple, as in "having one ingredient" — which is exactly why there are libraries that provide abstractions over these concerns, for example Stitch, Clump, or Fetch.

Keep in mind that I don't know anything about the context in which these libraries were created. For all I know, following that approach may have been the only viable solution to the problem the developers were facing in their specific situation, after careful analysis. Nevertheless, in my experience from other projects, the motivation to build abstractions like these is often to solve problems of a Netflix-style microservice architecture like the one I outlined above. As such, it serves as a nice example of the phenomenon that we often implement technological solutions to problems prematurely. I think that the reason why we see this phenomenon is that we don't apply systematic approaches to improving our software systems often enough.

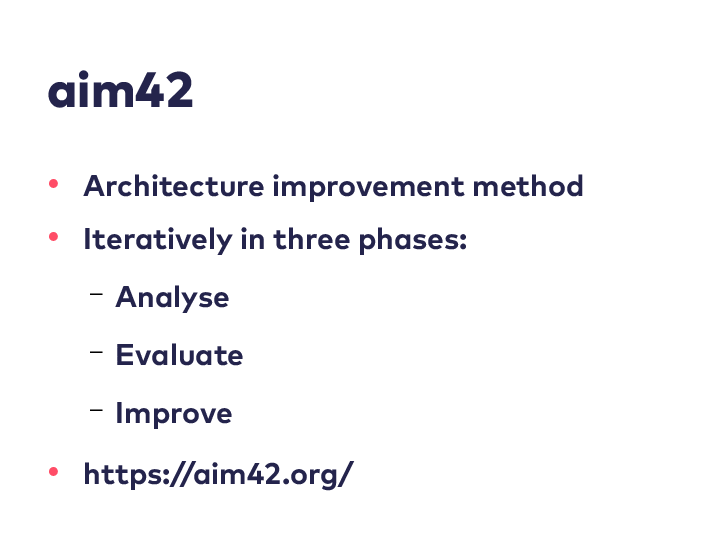

Coming back to our example, it's fair to assume that caching, batching and parallel execution of requests, all nicely abstracted away, probably works kind of okay. However, if we apply a systematic approach to improving our software, we may very well end up with a different solution. One such systematic approach is called the Architecture Improvement Method (aim42). Using this method, you work iteratively in three phases in order to improve your software system:

- Analyse in order to identify issues and improvements

- Evaluate in order to estimate the value of issues and the effort or cost associated with improvements

- Improve by applying selected improvements

One of the most important techniques in the analysis phase is root cause analysis. Unfortunately, it's also a tremendously underused technique. In my experience, people often jump to solutions too quickly. When we face a problem, we tend to immediately search for a solution for exactly this problem. Root cause analysis differentiates between problem and cause. The idea is to trace a problem to its origin and to identify root causes of symptoms or problems. When doing a root cause analysis, you continue to ask why until you have found the root cause in the causal chain.

Let's apply this to our high latency problem from our example. We could ask ourselves why our latency is so high and get to the answer that it's because of all the requests we need to make in our BFF. But why do we need to make so many requests? The answer could be that it's because we need to fetch data from many different services. And why do we need to do that? Maybe it's because all our services are designed around a single entity. And why is that?

At some point, we realize that we have an architectural problem at the macro level. Maybe, if we continue to ask why long enough, we will come to the conclusion that all these services we need to communicate with exist in exactly this way because of how the company is organized.

In any case, high latency of our BFF is only a symptom, and the root cause, the thing we should try to solve, lies a lot deeper. By making the symptom less painful, we don't reduce complexity in the system as a whole. Think of all the complexity that could be eliminated if we changed our architecture at the macro level — for example, if instead of a service in front of all those tiny microservices, we had a few slightly bigger systems that are not just built around a single entity or aggregate, but a whole Bounded Context. The whole orchestration and aggregation layer would be gone, and browsers would talk to those bigger systems directly, because they are entirely self-contained, including their own UI.

But the maximum zoom trap also applies to our issue with the JSON codecs. If we do a root cause analysis here, at some point, we may ask ourselves why we have a JSON API in the first place. Often, the sole consumer of such an API is a single page application (SPA) running in the browser. While there are perfectly valid use cases for SPAs, they are often chosen merely because it's "industry standard" to use them these days, which brings us back to the trap of embracing industry standards.

Moreover, we are programmers, and we like to write code. Having to build an SPA allows us to write even more code. It's a new playground for doing functional programming — applicative functors in the browser, how amazing is that?

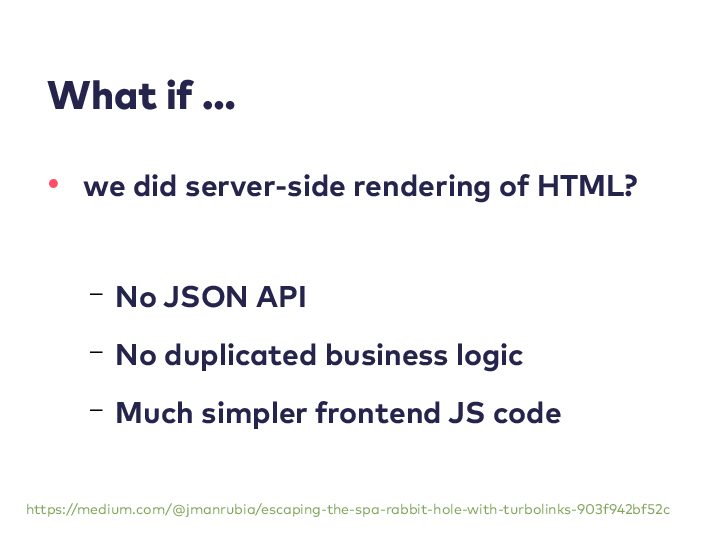

But imagine the unthinkable for a minute: What if we did server-side rendering of HTML instead? There wouldn't be any need to design an evolvable JSON API, which, unlike simply exposing your domain model, is not trivial, and there would definitely be no need for defining any JSON codecs. And that's just what you get on the server side. On the frontend side, you would have one piece of software less to maintain, you'd get faster load times and you could get rid of duplicated business logic. To sum it up, you could reduce the complexity of your frontend JavaScript code a lot.

In the words of Jorge Manrubia, who wrote a great article about how the complexity introduced by single-page applications can often be entirely avoided: "[..] what about if, instead of trying to find a solution, you put yourself in a situation where those problems don’t exist?" Eliminating problems altogether is always better than writing code to solve them, regardless of how purely functional that code is.

But why is it that we fall for technological solutions so often, especially ones that don't reduce complexity at all? I think that maybe we love programming too much. I was recently talking to someone about Rust, and that person said, "A language without higher-kinded types is completely useless!". Yes, certain abstractions are not possible without them, but I have never seen a project fail due to a lack of higher-kinded types. Projects fail because the problem domain is not well understood, or because the architecture is not a good fit for the requirements. They also fail because of how the organisation is structured and because of politics, be it intra- or inter-organizational. Projects fail because of people, and because of people not talking to each other.

When we jump towards technological solutions, we often do this because we love programming. In our case, we love functional programming. We also love technological challenges. Solving a problem by writing code is our comfort zone, so we take this route even if the actual problem is not a technological one. We prefer to work around non-technical problems using technological solutions because that's what we know, and it's the more interesting way out.

Conclusions

I work at a consultancy, and we are often hired to solve challenging technological problems. Often, it turns out that people only think that their challenges are about technology, but the important problems are not technological at all. I believe that as developers, our job is to solve problems and create value for the business and the users of the product we build. Our job is not to write code. Sometimes, doing our job means that we have to search for the root cause of a problem. Instead of solving a symptom by writing code, we have to tackle communication problems, deal with organizational issues, or improve the architecture of our system at the macro level.

I think that jumping towards technological solutions for symptoms also happens because everything that is not solvable with code is often seen as a given, something you can't change anyway. I think it's worth giving it a try more often, whether you are a consultant or not. Things are not always set in stone. And if we are serious about reducing accidental complexity, we should question our self-concept, our idea of what it means to be a software developer.

To summarize, we have to deal with a lot of accidental complexity these days, for multiple reasons. First of all, we adopt techniques and abstractions without analyzing and evaluating their costs and their usefulness in our specific situation. Moreover, we love solving technological problems.

So let's shift our discourse and talk more about when certain abstractions are a good fit and when they aren't, and let's bring more boring but possibly simpler solutions to the table. Also, don't be afraid to demand explanations for architectural decisions.

Finally, let's slow down when facing a problem. Let's take the time to analyze its root cause and let's jump out of our comfort zone, if necessary, even if it means that we will eliminate the problem and rob ourselves of a chance to solve it.

Credits

- self-contained systems diagram

- Photo by Domenico Gentile on Unsplash

- Photo by drmakete lab on Unsplash

- Photo by Johny Goerend on Unsplash

- Photo by juan pablo rodriguez on Unsplash

- Photo by Mario Azzi on Unsplash

- Photo by Marissa Rodriguez on Unsplash

- Photo by Verena Yunita Yapi on Unsplash

- Photo by Samuel Zeller on Unsplash

- Photo by Markus Spiske on Unsplash

- Photo by Kelly Sikkema on Unsplash

- Photo by Jaciel Melnik on Unsplash

- Photo by Shawn McKay on Unsplash

- Photo by Drew Graham on Unsplash

- Photo by Marcos Gabarda on Unsplash

- Photo by Kylli Kittus on Unsplash